Machine Learning 101

What is Machine Learning?

Machine learning is essentially the art of teaching computers to learn from data, much like humans learn from experience. Instead of giving a computer step-by-step instructions for every task, we provide it with large datasets and algorithms that allow it to identify patterns, make decisions, and improve over time without explicit programming. This approach has transformed numerous industries, enabling advancements in areas like image and speech recognition, recommendation systems, and even complex game strategies where machines can outperform human experts.

History of Machine Learning

The journey of machine learning dates back to the mid-20th century, marked by significant milestones that have shaped its evolution. The term "machine learning" was coined in 1959 by Arthur Samuel, a pioneer in the field, who defined it as the ability for computers to learn without being explicitly programmed. Early pioneers like Alan Turing also contributed foundational ideas, envisioning machines that could mimic human learning processes. In the 1950s and 60s, researchers developed foundational algorithms such as the perceptron, an early neural network model designed to recognize patterns. However, progress was slow due to limited computing power and data availability.

An important step came in the 1980s with the development of backpropagation for training neural networks. Machine learning then took off in the 1990s and 2000s, driven by the explosion of digital data and more powerful computing resources. Methods such as support vector machines, decision trees, and ensemble methods expanded what models could do, allowing for more complex and accurate predictions. Big data technologies accelerated progress further by making it practical to process datasets that had previously been too large to handle. The deep learning revolution of the 2010s, spearheaded by scientists like Geoffrey Hinton and Yann LeCun, marked another significant leap. Deep learning architectures, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), underpin innovations such as autonomous vehicles, sophisticated virtual assistants, and advanced image and speech recognition systems. In recent decades, machine learning techniques have been adopted widely across industries.

How Does Machine Learning Fit into the Broader AI Landscape?

When people think of AI today, chances are they are mostly thinking of machine learning and its impressive applications. Machine learning provides the methodologies and tools to achieve AI by enabling machines to learn from data and improve their performance over time. It acts as the engine that powers various AI applications, from natural language processing and computer vision to robotics and predictive analytics. By automating the learning process, machine learning allows AI systems to adapt to new situations and data, making them more flexible and effective in real-world applications. This widespread recognition of machine learning as a core aspect of AI highlights its pivotal role in advancing intelligent technologies.

How is Machine Learning Different From Traditional Programming?

Programming has evolved dramatically. Once dominated by explicit instructions that machines had to follow, it is now moving into an era where machines are taught to learn, adapt, and even infer rules on their own. This shift is best understood by comparing traditional programming with machine learning, two powerful but fundamentally different approaches.

Traditional Programming: Rules Come First

In traditional programming, the workflow is straightforward and precise. The process begins by writing explicit rules—sets of instructions for the machine to follow. These rules dictate how to process data and produce desired answers. In this approach, humans need to fully understand the problem and its solution to manually encode the rules. The machine's job is to execute these predefined instructions.

For example, if you’re creating a program that sorts a list of numbers, you'd specify step-by-step instructions for sorting, and the machine would follow them exactly to give the sorted list as the output. Here, no learning happens—it's all about repetition of defined steps.

Key characteristics of traditional programming:

Explicit Rules: All instructions are predefined by human programmers.

No Learning: The machine doesn't adapt or learn from data; it merely executes instructions.

Predictable Behavior: The same input will always yield the same output based on the given rules.

While traditional programming works well for well-defined tasks, it becomes incredibly complex when trying to solve problems that require pattern recognition, prediction, or decision-making in ambiguous environments.

Machine Learning: Learning from Data

Machine Learning takes a fundamentally different approach. Instead of writing specific rules, the machine is provided with data and expected answers. The machine’s task is to infer the underlying rules or patterns from this data on its own. Essentially, the machine learns from examples and can apply this learning to make predictions on new, unseen data.

For example, in image recognition, instead of explicitly telling the machine how to recognize a cat, you provide it with thousands of labeled images (some of cats, some not). Over time, the machine learns the common patterns among the images labeled as “cats” and creates a model to identify future images of cats.

Key characteristics of machine learning:

Implicit Rules: Instead of pre-defining every step, the machine infers patterns from the data.

Learning and Adaptation: The model can be improved by retraining it as more data becomes available.

Dynamic Behavior: Outputs can evolve as the machine is exposed to more or new data.

At this point, it’s essential to understand that machine learning can happen in different forms, depending on how the machine is taught to learn from the data:

Supervised Learning: In this approach, the machine is provided with labeled data, where each example in the dataset has a known output (like labeled images of cats). The machine learns by identifying patterns between inputs and their corresponding outputs, and it uses this knowledge to make predictions on new data.

Unsupervised Learning: Here, the machine is given data without explicit labels or expected outputs. The goal is for the machine to identify hidden patterns or structures within the data on its own (e.g., clustering similar data points together).

Reinforcement Learning: This method teaches machines to make decisions by interacting with an environment. The machine is rewarded for good actions and penalized for bad ones, and over time, it learns to maximize its cumulative rewards through trial and error.
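The trial-and-error loop behind reinforcement learning can be sketched with a tiny "two-armed bandit" problem. This is only an illustration under made-up assumptions: the function name `run_bandit`, the two hypothetical machine settings, and their success rates (20% and 80%) are invented for the example, and the strategy shown (epsilon-greedy) is just one simple way to balance exploring and exploiting.

```python
import random


def run_bandit(success_rates, steps=2000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most often."""
    rng = random.Random(seed)            # seeded so the run is repeatable
    counts = [0] * len(success_rates)    # how often each action was tried
    values = [0.0] * len(success_rates)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(success_rates))
        else:
            # Exploit: pick the action with the best estimate so far.
            action = max(range(len(values)), key=lambda i: values[i])
        # The environment answers with a reward (1) or nothing (0).
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        counts[action] += 1
        # Update the running average for the chosen action.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical environment: setting 0 succeeds 20% of the time, setting 1 80%.
values, counts = run_bandit([0.2, 0.8])
best_action = max(range(len(values)), key=lambda i: values[i])
print("estimated value per setting:", values)
print("times each setting was tried:", counts)
print("preferred setting:", best_action)
```

The agent is never told which setting is better. It only sees rewards, yet its estimates steer it toward the setting that pays off more often.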

The Key Difference: Source of Rules

In traditional programming, humans define the rules. In machine learning, the machine figures out the rules based on the data it’s trained on. This is a seismic shift because it opens up possibilities for solving complex problems that are difficult, if not impossible, to define explicitly through programming.

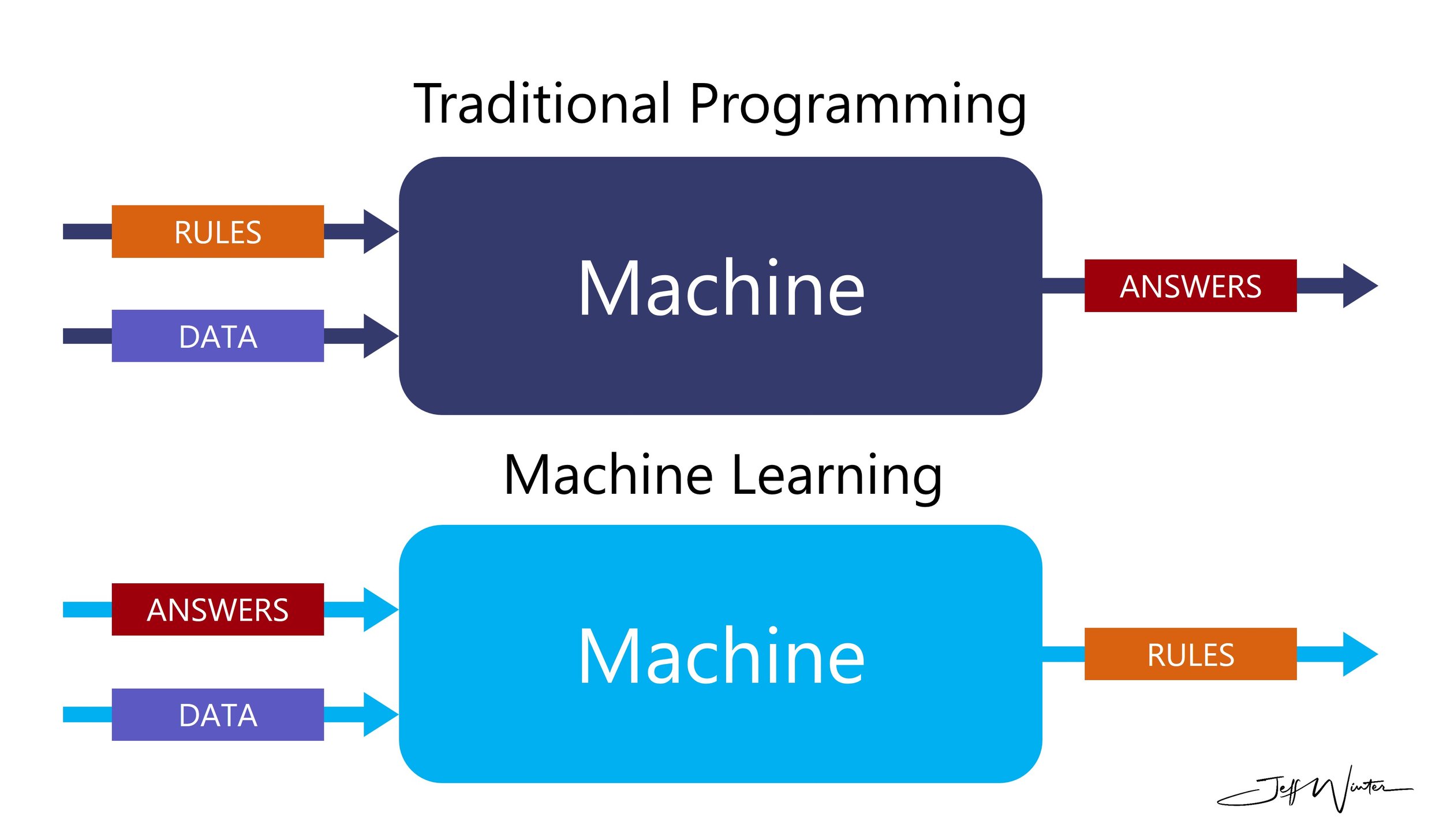

The image above illustrates this beautifully:

In traditional programming, rules and data go into the machine, and the answers are spit out.

In Machine Learning, it’s flipped: data and answers are given to the machine, which learns the rules to apply on new data.
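The same contrast can be sketched in a few lines of Python. Everything here is hypothetical: the overheating scenario, the 80-degree rule, the sample readings, and the function names `is_overheated_rule` and `learn_threshold` are assumptions made up for illustration, and the "learner" is deliberately tiny.

```python
# Traditional programming: a human writes the rule.
def is_overheated_rule(temp_c: float) -> bool:
    # The 80.0 threshold is hand-picked by the programmer (hypothetical value).
    return temp_c > 80.0


# Machine learning: the rule (here, a single threshold) is inferred
# from data plus answers.
def learn_threshold(temps: list, labels: list) -> float:
    """Return the candidate threshold that misclassifies the fewest examples."""
    candidates = sorted(temps)
    best_threshold, best_errors = candidates[0], len(temps) + 1
    for i in range(len(candidates) - 1):
        # Try the midpoint between each pair of neighbouring readings.
        threshold = (candidates[i] + candidates[i + 1]) / 2
        errors = sum((t > threshold) != y for t, y in zip(temps, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


# Made-up training data: temperature readings and whether the machine overheated.
temps = [60.0, 65.0, 70.0, 75.0, 85.0, 90.0, 95.0]
labels = [False, False, False, False, True, True, True]

learned = learn_threshold(temps, labels)
print(learned)                   # 80.0, recovered from the examples
print(is_overheated_rule(88.0))  # True, using the hand-written rule
print(88.0 > learned)            # True, using the learned rule
```

Both versions end up with the same rule here, but only the first required a person to know the threshold in advance.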

Why the Shift Matters

As industries continue to evolve, especially those facing highly variable environments like manufacturing, finance, and healthcare, the traditional approach is reaching its limits. Machine learning enables solutions that can handle ambiguity, make predictions, and adapt over time—attributes that are becoming crucial in today’s fast-paced, data-driven world.

In essence, traditional programming is about control, while machine learning is about discovery. Machines that once needed to be explicitly told what to do can now learn to interpret the world on their own, unlocking the next frontier of technological advancement.

How is Machine Learning Different From Statistics?

While statistics and machine learning often overlap, they serve distinct purposes and are applied in different ways. Let's explore the main distinctions in a more structured way, though still with a touch of fun.

Statistics is primarily focused on inference. It’s like the detective of data analysis—looking at a sample of data to draw conclusions about the larger population. For instance, a manufacturing company might use a statistical approach to reduce defects in their products by using models like logistic regression to identify factors that significantly increase the chances of defects. The goal here is to understand which factors are influencing the outcome and to use that understanding to improve decision-making.

On the other hand, machine learning—specifically supervised learning—is all about prediction. Here, the model is trained on labeled data (where the correct outcomes are known) and learns to predict outcomes on new, unseen data. So in the same manufacturing scenario, a machine learning model might be used to predict in real time whether a new batch of products will be defective based on patterns it learned from historical data. Machine learning techniques can range from decision trees to more complex models like random forests or neural networks, especially when dealing with non-linear relationships.

In addition to supervised learning, machine learning includes unsupervised learning, which isn’t focused on prediction but on discovering hidden patterns or groupings in data without predefined labels. It’s more about exploration and less about drawing traditional conclusions.

Key Differences

Inference vs. Prediction:

Statistics: The focus is on understanding relationships within the data and making inferences about the broader population. It’s about identifying the why and how behind observed outcomes.

Machine Learning: Primarily aims to predict future outcomes based on past data, especially when working with new or unseen data.

Techniques and Tools:

Statistics: Uses methods like logistic regression to find relationships between variables and outcomes.

Machine Learning: Goes beyond, employing models like decision trees, random forests, and neural networks to capture complex and non-linear patterns, drawing from various disciplines like calculus, linear algebra, and computer science.

Manufacturing Example

In a manufacturing company that produces electronic components, the goal might be to reduce the rate of defective products and improve overall production quality. The statistical approach to achieving this would focus on understanding the underlying factors contributing to defects in the manufacturing process. For example, the company might use statistical models like logistic regression to identify significant factors that increase the likelihood of defects. By analyzing data, they could infer which parts of the production process are causing issues and make adjustments to improve quality.

In contrast, machine learning takes a different approach, particularly when dealing with large amounts of data and complex relationships. Instead of focusing on understanding specific contributing factors, the objective of machine learning is to predict whether new batches of products will be defective based on historical data. In this case, the company might use a decision tree classifier or more complex models like random forests or neural networks to capture non-linear relationships and interactions in the data. These models would allow the company to make real-time predictions on defects as products move through the production line, providing immediate insights into potential issues.

While this example highlights where statistics and machine learning could be easily confused, it’s important to note that the two are fundamentally different in their focus. Statistics is about understanding the relationships within the data, while machine learning is about using those relationships to make accurate predictions.
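To make the two viewpoints concrete, here is a minimal sketch that fits one small logistic regression and then uses it in both ways. The data is entirely made up: `temp_deviation` and the shift code (-0.5 or +0.5) are hypothetical factors, and the shift is constructed to be unrelated to defects. The model is fitted with plain gradient descent for illustration. A real statistical analysis would also report standard errors and significance tests, which are omitted here.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic regression with plain batch gradient descent."""
    n_features = len(X[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in zip(X, y):
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(X)
    return weights, bias


# Made-up history: temperature deviation per batch and whether it was defective.
temp_deviation = [0.0, 1.0, 1.5, 2.0, 2.5, 3.0]
defective = [0, 0, 1, 0, 1, 1]

# Each temperature level was observed once on each shift (coded -0.5 / +0.5).
X, y = [], []
for t, label in zip(temp_deviation, defective):
    for shift in (-0.5, 0.5):
        X.append((t, shift))
        y.append(label)

weights, bias = fit_logistic(X, y)

# Statistics-style question: which factor is associated with defects?
print("coefficient for temperature deviation:", weights[0])
print("coefficient for shift:", weights[1])

# Machine-learning-style question: will this new batch be defective?
new_batch = (2.8, -0.5)
defect_probability = sigmoid(
    sum(w * x for w, x in zip(weights, new_batch)) + bias
)
print("predicted defect probability:", defect_probability)
```

The fitted model is identical in both cases. What differs is whether you read the coefficients to understand the process or call the model to score the next batch.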

Machine Learning Tasks

Machine learning can be applied in various ways, but it generally falls into three main task categories: Regression, Classification, and Clustering. Each task type helps solve different kinds of problems, and manufacturers can use these approaches to optimize processes, improve quality, and reduce costs.

Regression: "How Many?"

Regression is used when you need to predict a continuous value. In simple terms, you're trying to answer a "how many" or "how much" type of question. For instance, in manufacturing, you might use regression to predict the number of defective items produced in a shift based on factors like temperature, machine wear, or input material quality. Another example could be forecasting production costs for a specific period or estimating the future demand for certain products based on historical sales data. Typical applications beyond manufacturing include things like sales forecasting or financial portfolio predictions.
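As a small sketch of a "how many" question, the snippet below fits a straight line by ordinary least squares. The numbers are invented for illustration: `wear_hours` and `defects` are hypothetical measurements, and `fit_line` is a helper written for this example rather than a library function.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input: y ~ intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: hours of machine wear vs. defective items in a shift.
wear_hours = [10.0, 20.0, 30.0, 40.0, 50.0]
defects = [3.0, 6.0, 6.0, 9.0, 11.0]

slope, intercept = fit_line(wear_hours, defects)
predicted = intercept + slope * 60.0
print(slope, intercept)  # roughly 0.19 and 1.3
print(predicted)         # roughly 12.7 defective items at 60 hours of wear
```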

Classification: "What Category?"

Classification is all about assigning items to predefined categories. In manufacturing, classification could be used to determine whether a product is "good" or "defective" during quality control checks. For example, machine learning models could classify parts as either defective or non-defective based on data from sensors or cameras during production. Another practical use would be predicting whether certain machines are likely to fail soon based on their operating conditions and past behavior, helping you schedule preventive maintenance. Outside of manufacturing, classification could be seen in applications like facial recognition or predicting customer churn.
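A "what category" question can be sketched with one of the simplest classifiers, a nearest-centroid rule: average the examples of each class, then assign a new part to whichever average it is closest to. The sensor readings (vibration, temperature), the labels, and the helper names are all hypothetical, chosen only to illustrate the idea.

```python
import math
from collections import defaultdict


def fit_centroids(samples, labels):
    """Compute the average feature vector (centroid) for each class."""
    grouped = defaultdict(list)
    for sample, label in zip(samples, labels):
        grouped[label].append(sample)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(centroids, sample):
    """Assign the sample to the class whose centroid is nearest."""
    return min(centroids, key=lambda label: math.dist(centroids[label], sample))


# Made-up labeled parts: (vibration level, temperature in Celsius).
samples = [
    (0.20, 60.0), (0.30, 62.0), (0.25, 61.0),   # good parts
    (0.80, 75.0), (0.90, 78.0), (0.85, 77.0),   # defective parts
]
labels = ["good", "good", "good", "defective", "defective", "defective"]

centroids = fit_centroids(samples, labels)
print(classify(centroids, (0.70, 74.0)))  # defective
print(classify(centroids, (0.30, 63.0)))  # good
```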

Clustering: "In What Group?"

Clustering is about grouping data points that are similar to each other, without predefined categories. This technique is particularly useful in discovering patterns. In a manufacturing context, clustering might be used to segment machinery or production lines based on usage patterns to optimize maintenance schedules. Or, it could be applied to segment products or customers by their features, which helps with product customization or market analysis. Clustering could also help segment consumer purchasing behavior or identify groups of products that tend to be ordered together, leading to better inventory management.
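For an "in what group" question, no labels are supplied at all. The sketch below runs a basic k-means procedure on one-dimensional data. The daily run hours are invented, `kmeans_1d` is a helper written for this example, and the evenly spread starting centers are a simplification (it assumes `k` is at least 2).

```python
def kmeans_1d(values, k, iterations=10):
    """Group numbers into k clusters by repeatedly averaging nearby points."""
    ordered = sorted(values)
    # Simple deterministic start: spread the centers across the sorted data.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        # Step 1: assign every value to its nearest center.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Step 2: move each center to the average of its assigned values.
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


# Made-up data: average daily run hours for nine machines.
run_hours = [2.0, 2.5, 3.0, 11.0, 12.0, 12.5, 21.0, 22.0, 23.0]

centers, clusters = kmeans_1d(run_hours, k=3)
print(clusters)  # [[2.0, 2.5, 3.0], [11.0, 12.0, 12.5], [21.0, 22.0, 23.0]]
print(centers)   # light, medium, and heavy usage groups
```

The three usage groups were never defined up front. They emerge from the data, which is what separates clustering from classification.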

Machine Learning Generalization

Machine learning generalization refers to a model’s ability to perform well not just on the data it was trained on but also on new, unseen data. The goal is to build a model that captures the underlying patterns in the data rather than memorizing the training examples. Generalization is what makes a machine learning model valuable in real-world applications, as it can make accurate predictions on data it has never encountered before.

Why Generalization Matters:

Generalization is the key to building a model that works in the real world. A model that underfits won’t learn enough from the data to make meaningful predictions. Conversely, a model that overfits becomes overly tailored to the training data and performs poorly when new data is introduced. The goal is to build models that are flexible enough to capture important patterns but not so complex that they get bogged down in irrelevant details.

Underfitting:

Underfitting occurs when a model is too simple and fails to capture the true underlying patterns in the data. In regression, an underfitting model might create a straight line that doesn't represent the more complex relationship between the input features and the target. In classification, the decision boundary may be too basic, failing to distinguish between different categories accurately. This means the model has not learned enough from the data, leading to poor performance both on the training set and on unseen data.

Improving Underfitting:

Increase Model Complexity: Use a more complex model with additional parameters, such as moving from linear regression to polynomial regression or using a deeper neural network.

Add More Features: Incorporate additional relevant features to provide the model with more information and better capture underlying patterns.

Decrease Regularization: Reduce the strength of regularization techniques (like L1 or L2 regularization) to allow the model to fit the training data more closely.

Enhance Feature Engineering: Create new features or transform existing ones to better represent the data and improve the model’s ability to learn.

Train Longer: Allow the model to train for more epochs or iterations to enable it to learn the data more thoroughly.

Use a Different Algorithm: Experiment with different algorithms that might be better suited to the data and problem at hand.
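One of the remedies above, feature engineering, can be sketched with a toy case. The data follows a U-shaped curve that a straight line on the raw input cannot capture, while the same straight-line model fits perfectly once the input is squared. The data and the helpers `fit_line` and `mse` are made up for this illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input: y ~ intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    return slope, mean_y - slope * mean_x


def mse(xs, ys, slope, intercept):
    """Mean squared error of the line on the given data."""
    return sum((intercept + slope * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up U-shaped data: y equals x squared.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [4.0, 1.0, 0.0, 1.0, 4.0]

# Underfitting: a straight line on the raw input misses the curve entirely.
slope_raw, intercept_raw = fit_line(xs, ys)
error_raw = mse(xs, ys, slope_raw, intercept_raw)

# Remedy: engineer a new feature (x squared) and fit the same kind of model.
squared = [x ** 2 for x in xs]
slope_sq, intercept_sq = fit_line(squared, ys)
error_sq = mse(squared, ys, slope_sq, intercept_sq)

print(error_raw)  # roughly 2.8: the flat line predicts 2.0 everywhere
print(error_sq)   # roughly 0.0: the engineered feature captures the pattern
```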

Overfitting:

Overfitting happens when a model becomes too complex and learns not just the patterns but also the noise in the data. In regression, this results in a convoluted line that fits every tiny fluctuation in the training data. In classification, the decision boundary wraps too tightly around every data point, making the model overly specific to the training set. While this leads to high performance on the training data, the model struggles to generalize to new data, as it essentially "memorized" the training set rather than learning true patterns.

Improving Overfitting:

Apply Regularization: Use regularization methods such as L1 (Lasso) or L2 (Ridge) to penalize large coefficients and reduce model complexity.

Simplify the Model: Reduce the number of parameters or choose a simpler model to prevent it from capturing noise in the training data.

Gather More Training Data: Increase the size of the training dataset to help the model generalize better to new, unseen data.

Use Data Augmentation: Expand the training dataset by creating modified versions of existing data (e.g., rotating or flipping images) to improve model robustness.

Implement Early Stopping: Monitor the model’s performance on a validation set and stop training when performance starts to decline, preventing the model from overfitting the training data.

Employ Cross-Validation: Use cross-validation techniques to check that the model performs well across different subsets of the data, which gives a more reliable estimate of how well it generalizes.

Prune the Model: Remove unnecessary weights or nodes in the model to reduce complexity and improve generalization.

Ensemble Methods: Combine multiple models (e.g., bagging, boosting) to reduce variance and improve overall performance.
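As an illustration of the first remedy, the sketch below applies L2 (Ridge) regularization to a one-input linear model. For this simple case the penalized slope has a closed form: the usual least-squares slope with the penalty strength `alpha` added to the denominator. The data, the helper name `fit_ridge_line`, and the `alpha` values are assumptions chosen for the example. The intercept is left unpenalized, which is a common convention.

```python
def fit_ridge_line(xs, ys, alpha):
    """Least squares with an L2 penalty of strength alpha on the slope."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    # The penalty inflates the denominator, shrinking the slope toward zero.
    slope = s_xy / (s_xx + alpha)
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up noisy data.
xs = [0.0, 2.0, 4.0, 6.0, 8.0]
ys = [1.0, 3.0, 9.0, 11.0, 17.0]

slopes = {}
for alpha in (0.0, 10.0, 40.0):
    slopes[alpha], _ = fit_ridge_line(xs, ys, alpha)
    print(alpha, slopes[alpha])  # roughly 2.0, then 1.6, then 1.0
```

With `alpha` set to zero this is ordinary least squares. Larger values trade some fit on the training data for a more conservative model, so the strength is usually chosen by checking performance on held-out data.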

Just Right:

A well-generalized model achieves the perfect balance. In regression, the model fits the data appropriately, capturing the key trends without becoming overly complex. For classification, the decision boundary correctly separates categories with high accuracy. This is the "Goldilocks" zone of machine learning, where the model is neither too simple nor too complex. It generalizes well and is likely to perform effectively on new data, making reliable predictions.
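The three situations can be compared side by side by measuring error on the training data and on held-out test data. In this sketch, a model that always predicts the average stands in for "too simple", a straight line for "just right", and a model that memorizes the nearest training example for "too complex". The data is made up, and these stand-ins were picked for brevity rather than realism.

```python
def mse(predict, xs, ys):
    """Mean squared error of a prediction function on the given data."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data: a roughly linear trend with some noise.
train_x = [0.0, 2.0, 4.0, 6.0, 8.0]
train_y = [1.0, 3.0, 9.0, 11.0, 17.0]
test_x = [1.5, 3.5, 5.5, 7.5]
test_y = [3.5, 6.5, 11.5, 14.5]

mean_x = sum(train_x) / len(train_x)
mean_y = sum(train_y) / len(train_y)
slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y)) / sum(
    (x - mean_x) ** 2 for x in train_x
)
intercept = mean_y - slope * mean_x


def predict_mean(x):
    # Too simple: ignores the input and always predicts the average.
    return mean_y


def predict_line(x):
    # Just right: follows the overall trend.
    return intercept + slope * x


def predict_nearest(x):
    # Too complex: memorizes the training set and copies the closest example.
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


results = {}
for name, model in [
    ("underfit", predict_mean),
    ("just right", predict_line),
    ("overfit", predict_nearest),
]:
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(name, results[name])
# underfit:   train about 32.96, test about 18.89 (poor everywhere)
# just right: train about 0.96,  test about 0.29  (good on both)
# overfit:    train 0.0,         test about 3.25  (perfect on training data only)
```

A low training error alone says little. The comparison between training and test error is what reveals where a model sits between the two extremes.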

What Manufacturing Leaders Should Know About Machine Learning

For manufacturing business leaders just getting acquainted with machine learning (ML), it’s important to grasp the bare minimum technical concepts to effectively steer your teams. At the very least, understand what data preprocessing, model training, and evaluation mean. Think of data preprocessing as cleaning up your workshop before starting a project: without it, your ML models can’t perform well. Model training is like teaching your team the best ways to handle tasks, and evaluation is assessing their performance to ensure everything runs smoothly. Knowing these basics allows you to have meaningful conversations with your technical teams, ask the right questions, and make informed decisions without needing to dive into the nitty-gritty details yourself.

From a business perspective, it's essential to understand how ML can align with and drive your strategic goals. Focus on identifying areas where ML can provide tangible benefits, such as predictive maintenance to reduce downtime, quality control to minimize defects, or optimizing supply chains for better cost efficiency. My MBA coursework highlighted the importance of data quality and the right technological investments, which are the backbone of any successful ML project. Additionally, keeping an eye on the return on investment (ROI) and staying updated with the latest ML trends helps in assessing opportunities and setting realistic expectations for your teams. Just like my MBA studies gave me enough insight to understand what data scientists and ML specialists were discussing, your goal should be to acquire enough knowledge to lead confidently without diving deep into the technical weeds. This balanced approach ensures you can support your teams effectively, drive innovation, and maintain a competitive edge in the manufacturing landscape—all while leaving the model training to the experts.

References:

Samuel, Arthur (1959). "Some Studies in Machine Learning Using the Game of Checkers". IBM Journal of Research and Development. 3 (3): 210–229. CiteSeerX 10.1.1.368.2254. doi:10.1147/rd.33.0210. S2CID 2126705

Dataversity - Foote, Keith (2021) - A Brief History of Machine Learning: https://www.dataversity.net/a-brief-history-of-machine-learning/

Machine Learning Mastery - Brownlee, Jason (2019) - Overfitting and Underfitting With Machine Learning Algorithms: https://machinelearningmastery.com/overfitting-and-underfitting-with-machine-learning-algorithms/