Where Does ChatGPT Fit in the Field of AI?

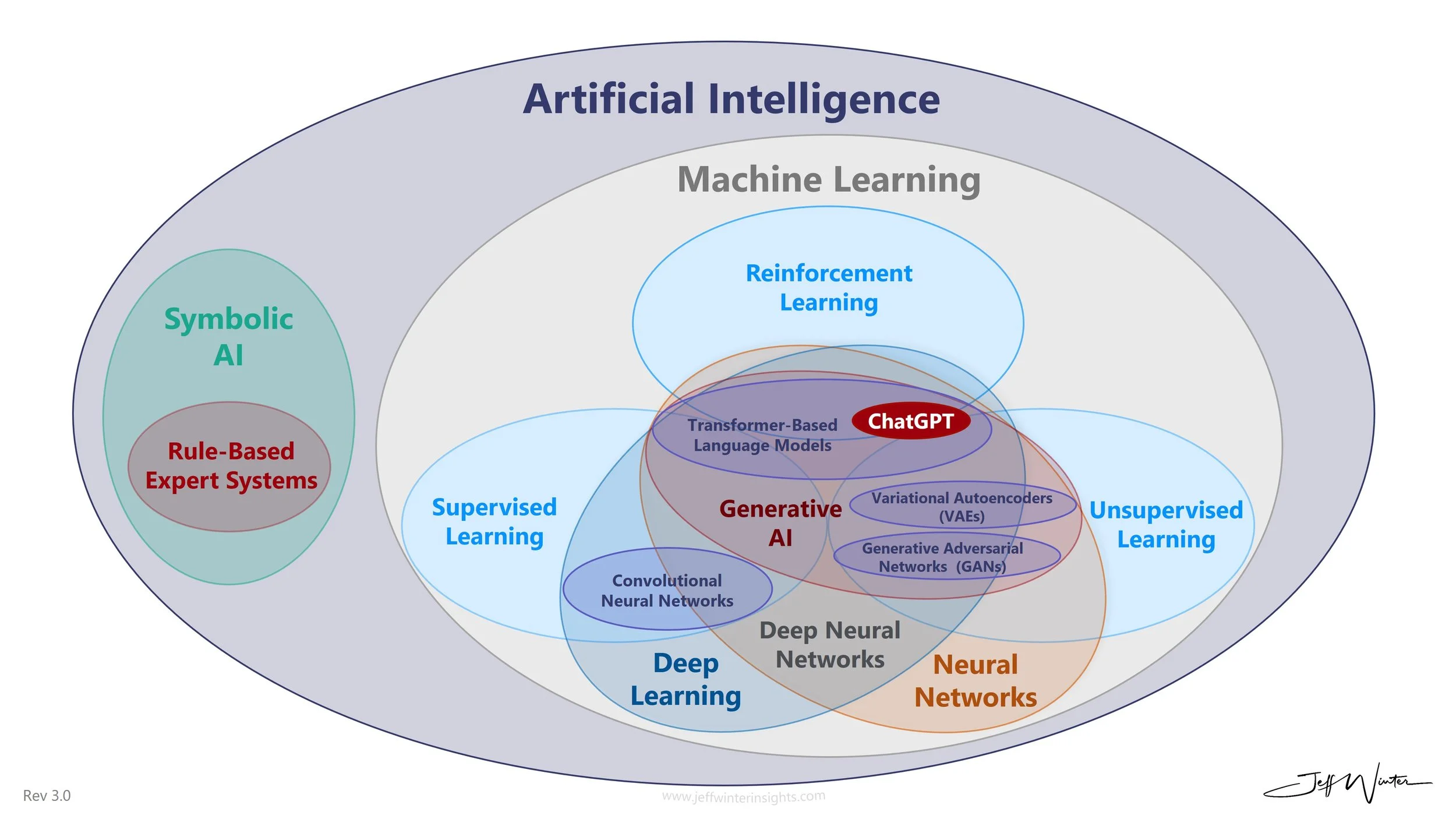

There is no universally agreed-upon definition or delineation of most subfields of Artificial Intelligence; sources classify the domains in different, albeit similar, ways.

In the period spanning late 2022 to early 2023, the rise of consumer-facing generative AI tools marked a significant shift in how both the public and enterprises perceived AI's potential. Although generative AI's capabilities were already drawing wide discussion when OpenAI released GPT-2 in 2019, their full promise only recently became palpable to businesses.

I have attempted to build a taxonomy that represents the most widely accepted understanding of each AI field. I consider this “Rev 3” and will modify and expand it in the future as I learn more.

Artificial Intelligence (AI): The Umbrella Term

Artificial Intelligence (AI) is the science and engineering of creating machines or software capable of performing tasks that typically require human intelligence. These tasks include reasoning, learning, problem-solving, understanding natural language, and even creativity. AI took shape as a field in the mid-20th century, building on foundations such as Alan Turing's 1936 concept of a "universal Turing machine," a single machine capable of carrying out any computation given the right instructions, and his 1950 paper "Computing Machinery and Intelligence," which asked whether machines could think and suggested that they might learn from experience. Over the decades, AI has grown into a diverse field, with innovations driving technologies that now shape industries and everyday life.

AI’s development can be broadly divided into two main branches: Symbolic AI and Machine Learning.

Symbolic AI

Symbolic AI, the earlier of the two branches to dominate the field, emerged in the 1950s and 1960s. It relies on explicitly programmed rules and logic to simulate human reasoning. These systems, often called rule-based systems or expert systems, use "if-then" statements to make decisions. Symbolic AI was foundational for early breakthroughs like the Logic Theorist (1956), which proved theorems from Whitehead and Russell's Principia Mathematica, and MYCIN (1970s), which identified bacteria causing severe infections and recommended antibiotics. While symbolic AI is effective for solving well-defined problems, its rigidity and inability to handle ambiguity or adapt to new information limited its applications, paving the way for new approaches.
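The "if-then" style of reasoning can be illustrated with a minimal forward-chaining rule engine in Python. The rules and fact names below (`high_vibration`, `bearing_wear`, and so on) are hypothetical maintenance examples, not taken from any real expert system; MYCIN, for instance, used hundreds of rules and attached certainty factors to its conclusions.

```python
# A minimal forward-chaining rule engine: each rule reads
# "IF all of these facts hold, THEN conclude this new fact".
# Rule contents and fact names are hypothetical, for illustration only.
RULES = [
    ({"high_vibration", "high_temperature"}, "bearing_wear"),
    ({"bearing_wear"}, "schedule_maintenance"),
    ({"low_oil_pressure"}, "stop_machine"),
]


def forward_chain(facts, rules=RULES):
    """Fire every rule whose conditions are satisfied until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only if all its conditions are already known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"high_vibration", "high_temperature"})
    print(sorted(derived))
```

Each conclusion can be traced back to the rule that produced it, which makes such systems easy to audit; but a combination of facts that no rule anticipates simply yields no new conclusion, which is the rigidity described above.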

Machine Learning

Machine Learning (ML), the second branch, has roots nearly as old as Symbolic AI (Arthur Samuel coined the term in 1959), but it rose to prominence in the 1980s and 1990s as a response to Symbolic AI's limitations. Instead of relying on predefined rules, ML algorithms learn patterns and make predictions from data. Neural networks, loosely inspired by the human brain, became a cornerstone of ML, with early models like the Perceptron (1957) laying the groundwork for modern deep learning. ML has enabled AI to excel in tasks like image recognition, speech processing, and autonomous decision-making, far surpassing symbolic AI in handling complex and unstructured problems.

While symbolic AI and machine learning differ in their methodologies, they remain complementary. Symbolic AI offers deterministic precision, while machine learning provides adaptability and scalability. Together, these branches form the backbone of modern AI systems, enabling advancements in robotics, natural language processing, and generative AI like ChatGPT.

Supervised Learning

Supervised learning is the most widely used type of ML, relying on labeled datasets to train models. The goal is to learn a mapping between inputs (e.g., sensor readings) and outputs (e.g., machine status).

How it Works: The algorithm is trained on input-output pairs. For example, in a predictive maintenance scenario, inputs could be sensor data like vibration levels or temperature, while outputs are labels such as "normal" or "failure." The model adjusts its parameters to reduce errors during training and can then predict outcomes on new, unseen data.
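A minimal sketch of this training loop, assuming a logistic-regression classifier (just one of many possible supervised models) and a handful of invented, pre-scaled readings: each example pairs (vibration, temperature) with a label of 0 ("normal") or 1 ("failure"), and gradient descent nudges the weights to reduce prediction error.

```python
import math

# Hypothetical labeled training data: (vibration, temperature) scaled to 0..1,
# label 1 = "failure", 0 = "normal". Values are invented for illustration.
DATA = [
    ((0.10, 0.20), 0), ((0.20, 0.10), 0), ((0.15, 0.30), 0), ((0.30, 0.25), 0),
    ((0.80, 0.90), 1), ((0.90, 0.70), 1), ((0.70, 0.85), 1), ((0.85, 0.95), 1),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, lr=0.5, epochs=2000):
    """Fit logistic-regression weights with batch gradient descent on log loss."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in data:
            error = sigmoid(w[0] * x1 + w[1] * x2 + b) - y  # predicted probability minus label
            grad_w[0] += error * x1
            grad_w[1] += error * x2
            grad_b += error
        # Step each parameter against its average gradient to reduce the error.
        w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    """Label a new, unseen reading using the learned weights."""
    p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
    return "failure" if p >= 0.5 else "normal"


if __name__ == "__main__":
    w, b = train(DATA)
    print(predict(w, b, (0.12, 0.18)))  # low vibration and temperature
    print(predict(w, b, (0.88, 0.80)))  # high vibration and temperature
```

The 0.5 probability threshold is an assumption; in predictive maintenance it is often lowered so that fewer real failures are missed, at the cost of more false alarms.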

Industrial Applications:

Predictive Maintenance: Models analyze sensor data to predict when equipment might fail, reducing unplanned downtime.

Quality Control: Supervised learning detects defects on production lines by training models on labeled images of acceptable and defective products.

Supply Chain Forecasting: Algorithms predict demand based on historical sales data, enabling better inventory planning.

Challenges: Supervised learning requires large amounts of labeled data, which can be expensive and time-consuming to collect in manufacturing environments. Additionally, it may struggle with entirely new failure modes not seen during training.

Unsupervised Learning

Unsupervised learning uncovers patterns, relationships, or structures in unlabeled data. It is particularly useful in manufacturing where operational data is abundant but not always labeled.

How it Works: The algorithm explores the data to group similar observations, identify outliers, or reduce data complexity. For instance, in manufacturing, it can group machines with similar usage patterns or detect unusual activity that might indicate inefficiencies or risks.

Industrial Applications:

Anomaly Detection: Identifying irregularities in equipment performance that might indicate emerging faults or inefficiencies.

Process Optimization: Grouping production parameters (e.g., temperature, pressure, speed) to identify optimal configurations for maximizing output or minimizing waste.

Customer Segmentation: For industrial suppliers, unsupervised learning groups customers based on purchasing behaviors, enabling tailored service or pricing strategies.

Key Techniques: Clustering algorithms like K-means or DBSCAN and dimensionality reduction techniques such as Principal Component Analysis (PCA).
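A bare-bones k-means sketch in Python shows the two alternating steps behind the clustering idea. The machine usage figures are invented, and the deterministic seeding (evenly spaced input points as starting centroids) is an assumption made to keep the example reproducible; in practice a library implementation such as scikit-learn's `KMeans` would normally be used instead.

```python
import math

# Hypothetical machine usage profiles: (operating hours per day, average load %).
MACHINES = [
    (2.0, 20.0), (3.0, 25.0), (2.5, 22.0),     # lightly used
    (20.0, 85.0), (22.0, 90.0), (21.0, 80.0),  # heavily used
]


def kmeans(points, k, max_iterations=100):
    """Cluster points by alternating nearest-centroid assignment and centroid updates."""
    # Deterministic seeding for this sketch: evenly spaced input points.
    # (Libraries typically use randomized k-means++ seeding instead.)
    centroids = [points[i * len(points) // k] for i in range(k)]
    labels = []
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster with the nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return labels, centroids


if __name__ == "__main__":
    labels, centroids = kmeans(MACHINES, k=2)
    print(labels)     # cluster index for each machine
    print(centroids)  # average usage profile of each group
```

Because the load percentage spans a much larger range than operating hours, it dominates the distance calculation here; real pipelines usually standardize each feature first.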

Challenges: Without labels, interpreting the results requires domain expertise. For instance, identifying whether a detected anomaly represents a critical failure or a harmless fluctuation often needs human insight.

Reinforcement Learning (RL)

Reinforcement learning involves teaching an AI agent to make sequential decisions in a dynamic environment by rewarding desirable outcomes and penalizing undesirable ones. RL is particularly effective in manufacturing environments where the system needs to adapt to changing conditions.

How it Works: An agent interacts with the environment (e.g., a production line or robotic system) and learns a policy to maximize cumulative rewards. For example, in an assembly process, the agent could be rewarded for faster assembly times and penalized for errors.

Industrial Applications:

Production Line Optimization: RL algorithms adjust machine parameters in real-time to maximize efficiency or throughput, such as balancing workloads across multiple machines.

Energy Management: RL optimizes energy consumption in factories by learning to schedule operations during off-peak hours or adjust HVAC settings dynamically.

Autonomous Robots: RL is used to train robots for tasks like assembling products, sorting items, or navigating factory floors.

Key Techniques:

Q-Learning: A method for learning the value of actions in specific states, useful for discrete tasks like optimizing conveyor belt speeds.

Deep Reinforcement Learning: Combines neural networks with RL to handle high-dimensional inputs, such as video feeds in a manufacturing plant.
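Tabular Q-learning can be sketched on a deliberately tiny, invented environment: a conveyor with five speed levels where level 3 is assumed to be the best setting. The environment, reward scheme, and hyperparameters (`alpha`, `gamma`, `epsilon`) are all illustrative assumptions; the update rule itself is the standard Q-learning step with an epsilon-greedy exploration policy.

```python
import random

# Hypothetical toy problem: a conveyor has 5 speed levels (0-4), and level 3 is
# assumed to be the best trade-off between throughput and jams.
N_SPEEDS = 5
TARGET = 3
ACTIONS = (-1, 0, +1)  # slow down, hold, speed up


def step(speed, action):
    """Apply an action; the reward is 0 at the target speed and worse the further away."""
    new_speed = min(max(speed + ACTIONS[action], 0), N_SPEEDS - 1)
    return new_speed, -abs(new_speed - TARGET)


def q_learning(episodes=2000, steps=20, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_SPEEDS)]  # q[state][action]
    for _ in range(episodes):
        s = rng.randrange(N_SPEEDS)  # start each episode at a random speed
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[s][i])  # exploit
            s_next, r = step(s, a)
            # Move q[s][a] toward the reward plus the discounted best next-state value.
            q[s][a] += alpha * (r + gamma * max(q[s_next]) - q[s][a])
            s = s_next
    return q


def greedy_policy(q):
    """The learned policy: the highest-valued action in each state."""
    return [max(range(len(ACTIONS)), key=lambda i: q[s][i]) for s in range(N_SPEEDS)]


if __name__ == "__main__":
    policy = greedy_policy(q_learning())
    print([("slow down", "hold", "speed up")[a] for a in policy])
```

Because the learned values live in a lookup table, this approach only suits small, discrete problems; deep reinforcement learning replaces the table with a neural network when states (for example, raw camera frames) are too numerous to enumerate.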

Challenges: RL requires extensive simulation or real-world trials, which can be resource-intensive. In manufacturing, mistakes during training (e.g., damaging equipment) can be costly or even dangerous.

Neural Networks and Deep Learning

Neural networks are computational models inspired by the structure and function of the human brain. These models are composed of interconnected layers of nodes, or "neurons," which process data by simulating how biological neurons transmit signals. Deep learning builds on neural networks by adding multiple layers, creating "deep" architectures capable of learning complex patterns and representations. Together, neural networks and deep learning are fundamental to many modern AI applications, driving advancements in industries such as manufacturing, healthcare, and finance.

Neural Networks: The Foundation

How They Work: Neural networks consist of layers of interconnected nodes (neurons). Each node computes a weighted sum of its inputs, applies a nonlinear activation function, and passes the result to the next layer. The weights determine the strength of these connections and are adjusted during training by gradient-based optimization, with backpropagation used to compute the gradients. Unlike many classical ML algorithms, which depend on features engineered by hand, neural networks can learn useful features directly from raw data.

Industrial Applications:

Fault Diagnosis in Complex Systems: Neural networks analyze vast sensor data streams from interconnected systems (like turbines or CNC machines) to detect subtle, non-linear patterns indicative of faults. Unlike traditional ML, which may require handcrafted features, neural networks autonomously identify these patterns.

Dynamic Energy Management: Neural networks predict real-time energy needs across a factory by analyzing inputs like production schedules and environmental data, enabling dynamic adjustments.

Real-Time Supply Chain Routing: By analyzing constantly changing variables (e.g., weather, traffic, production delays), neural networks enable intelligent logistics routing decisions, adapting dynamically.

Key Techniques:

Feedforward Networks: Process data in one direction, suitable for static problems like fault classification.

Recurrent Neural Networks (RNNs): Designed for sequential data, such as time-series data from production logs.

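Backpropagation: A method that computes how much each weight contributed to the prediction error, so that an optimizer such as gradient descent can iteratively adjust the weights to minimize that error during training.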
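To show a feedforward pass and backpropagation working together, here is a deliberately tiny network (2 inputs, 2 hidden sigmoid units, 1 sigmoid output) in plain Python. The sensor story, network size, learning rate, and random seed are assumptions for illustration; production code would use a framework with automatic differentiation rather than hand-derived gradients.

```python
import math
import random

# Hypothetical fault data: two redundant sensors (1 = tripped). A fault is flagged
# when exactly one sensor trips, an XOR pattern no single straight line can separate.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed=1):
    """Random starting weights for a 2-input, 2-hidden-unit, 1-output network."""
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(2)]
    return w_hidden, [0.0, 0.0], [rng.uniform(-1, 1), rng.uniform(-1, 1)], 0.0


def forward(params, x):
    """Feedforward pass: inputs -> hidden layer -> output, in one direction only."""
    w_hidden, b_hidden, w_out, b_out = params
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w_hidden, b_hidden)]
    return h, sigmoid(w_out[0] * h[0] + w_out[1] * h[1] + b_out)


def mse(params, data):
    return sum((forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)


def train_step(params, data, lr):
    """Backpropagate the mean squared error, then take one gradient-descent step."""
    w_hidden, b_hidden, w_out, b_out = params
    g_wh = [[0.0, 0.0], [0.0, 0.0]]
    g_bh = [0.0, 0.0]
    g_wo = [0.0, 0.0]
    g_bo = 0.0
    for x, t in data:
        h, y = forward(params, x)
        # Error signal at the output: d(loss)/d(pre-activation) through the sigmoid.
        delta_out = 2.0 * (y - t) * y * (1.0 - y)
        g_bo += delta_out
        for j in range(2):
            g_wo[j] += delta_out * h[j]
            # Pass the error back through the output weight and the hidden sigmoid.
            delta_h = delta_out * w_out[j] * h[j] * (1.0 - h[j])
            g_wh[j][0] += delta_h * x[0]
            g_wh[j][1] += delta_h * x[1]
            g_bh[j] += delta_h
    n = len(data)
    w_hidden = [[w_hidden[j][i] - lr * g_wh[j][i] / n for i in range(2)] for j in range(2)]
    b_hidden = [b_hidden[j] - lr * g_bh[j] / n for j in range(2)]
    w_out = [w_out[j] - lr * g_wo[j] / n for j in range(2)]
    return w_hidden, b_hidden, w_out, b_out - lr * g_bo / n


if __name__ == "__main__":
    params = init_params()
    print(f"loss before training: {mse(params, DATA):.4f}")
    for _ in range(5000):
        params = train_step(params, DATA, lr=2.0)
    print(f"loss after training:  {mse(params, DATA):.4f}")
```

The loss should fall as training proceeds, though a network this small is not guaranteed to reach zero error from every random initialization; it can stall in a poor local minimum, one reason neural networks need the careful tuning mentioned below.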

Challenges: Neural networks require large datasets, computational resources, and careful tuning to prevent overfitting, particularly in scenarios where data variability is high (e.g., real-time manufacturing systems).

Deep Learning: Scaling Complexity

Deep learning leverages neural networks with many layers to learn hierarchical representations of data. Compared with shallow neural networks that have only one or two hidden layers, deep learning excels with high-dimensional, unstructured data like images, video, and audio. In industrial settings, it enables applications that were previously infeasible with simpler ML algorithms.

How It Works: Each layer in a deep learning model processes increasingly complex features. For instance, in a system analyzing video feeds from a factory floor, early layers might detect edges and shapes, while deeper layers identify machines, tools, or human operators. This allows deep learning to handle tasks requiring high-level abstractions.

Industrial Applications:

Industrial Process Simulations: Deep learning models simulate and optimize entire manufacturing workflows, such as testing different configurations in a virtual environment to maximize yield. Unlike traditional ML, these models can account for thousands of interdependent variables.

Natural Language Processing (NLP) for Manufacturing: Deep learning powers systems that analyze maintenance logs, operator notes, or customer feedback, extracting insights to improve processes or products. Unlike simpler ML models, it can capture nuances in language and context.

Visual Inspection with Multiple Modalities: Deep learning integrates data from multiple sources (e.g., thermal cameras, X-rays, and visible light) for a more comprehensive analysis, which traditional ML might struggle to combine effectively.

Key Techniques:

Autoencoders: Learn efficient representations of data for tasks like anomaly detection in process simulations.

Generative Models: Used to create synthetic data for training models in scenarios where real-world data is sparse.

Transfer Learning: Pre-trained deep learning models are adapted for specific industrial tasks, significantly reducing training time.
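The autoencoder approach to anomaly detection can be sketched with the smallest possible case: a linear autoencoder that squeezes two sensor readings through a one-number bottleneck and is trained only on normal data. The sensor pairing, the data, and the starting weights are invented assumptions; industrial autoencoders are deep, nonlinear, and built with an ML framework, but the scoring idea (high reconstruction error signals an anomaly) is the same.

```python
# Hypothetical, centered readings from two correlated sensors (for example, scaled
# motor current and winding temperature) that normally rise and fall together.
NORMAL = [(t / 5, t / 5) for t in range(-5, 6)]


def train_autoencoder(data, lr=0.1, epochs=2000):
    """Fit a linear 2 -> 1 -> 2 autoencoder (no biases) by gradient descent."""
    w_enc = [0.5, 0.1]  # arbitrary illustrative starting weights
    w_dec = [0.3, 0.6]
    n = len(data)
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            z = w_enc[0] * x[0] + w_enc[1] * x[1]            # compress to one number
            r = [w_dec[0] * z - x[0], w_dec[1] * z - x[1]]    # reconstruction residual
            back = w_dec[0] * r[0] + w_dec[1] * r[1]          # error signal at the bottleneck
            for i in range(2):
                g_dec[i] += 2.0 * r[i] * z / n
                g_enc[i] += 2.0 * back * x[i] / n
        w_enc = [w_enc[i] - lr * g_enc[i] for i in range(2)]
        w_dec = [w_dec[i] - lr * g_dec[i] for i in range(2)]
    return w_enc, w_dec


def anomaly_score(w_enc, w_dec, x):
    """Squared reconstruction error; large when x breaks the pattern seen in training."""
    z = w_enc[0] * x[0] + w_enc[1] * x[1]
    return (w_dec[0] * z - x[0]) ** 2 + (w_dec[1] * z - x[1]) ** 2


if __name__ == "__main__":
    w_enc, w_dec = train_autoencoder(NORMAL)
    print("typical reading: ", anomaly_score(w_enc, w_dec, (0.6, 0.6)))
    print("sensors disagree:", anomaly_score(w_enc, w_dec, (1.0, -1.0)))
```

Where to set the alarm threshold on the score is a judgment call that, as with other unsupervised methods, usually needs domain expertise.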

Challenges: Deep learning models are resource-intensive, require vast datasets, and often operate as "black boxes," making it hard to interpret their decisions—an issue in industries requiring strict regulatory compliance.

Generative AI

Generative AI refers to algorithms capable of creating new data, be it images, text, music, or other forms of content, by learning patterns and distributions from existing data. Unlike traditional AI models that analyze or classify data, generative AI produces new outputs that resemble its training data. This capability drives innovation across industries, enabling applications from synthetic data generation to creative automation. This overview covers three prominent families of generative models: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Transformer-Based Language Models.

Variational Autoencoders (VAEs)

VAEs are a type of generative AI model designed to learn the underlying structure of data by compressing it into a smaller, latent representation and reconstructing it. They use probabilistic approaches to generate new data samples that are similar to, but not identical to, the original data.

How They Work: VAEs consist of two main components:

Encoder: Compresses input data into a latent space representation (a reduced set of features that capture the data's essence).

Decoder: Reconstructs the original data from this latent representation. By sampling from the latent space, VAEs can generate new, synthetic data.

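Unlike traditional autoencoders, which map each input to a single point in the latent space, VAEs have the encoder output a probability distribution (typically a mean and a variance for each latent dimension) and sample from it, introducing variability into the generated outputs.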

Industrial Applications:

Material Design: VAEs are used to generate new molecular structures with desired properties, accelerating the discovery of advanced materials.

Fault Simulation in Manufacturing: By generating synthetic datasets of rare machine failure scenarios, VAEs allow models to train on events that are too infrequent to capture in real data.

Production Data Compression: VAEs compress large datasets (e.g., sensor data from IIoT devices) for efficient storage and transfer without significant loss of information.

Key Techniques:

Latent Variable Modeling: Encodes data into a continuous probability distribution.

Reparameterization Trick: Rewrites sampling as z = μ + σ·ε, where all the randomness lives in ε drawn from a standard normal distribution, so gradients can flow through μ and σ during backpropagation.

Bayesian Inference: Underpins the probabilistic nature of the latent space.
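The reparameterization trick and the VAE training objective fit in a few lines of plain Python. This sketch assumes the common conventions of a diagonal Gaussian latent distribution, a standard-normal prior, an encoder that outputs a mean and a log-variance per latent dimension, and a squared-error reconstruction term; the function names are illustrative, and a real VAE would compute all of this inside an autodiff framework so gradients actually reach the encoder.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1).

    All randomness lives in eps, so z is a smooth, deterministic function of
    mu and log_var, which is what lets gradients flow back into the encoder.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return sum(-0.5 * (1.0 + lv - m * m - math.exp(lv)) for m, lv in zip(mu, log_var))


def vae_loss(x, x_reconstructed, mu, log_var):
    """Reconstruction error (squared error, one common choice) plus the KL regularizer."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_reconstructed))
    return reconstruction + kl_to_standard_normal(mu, log_var)


if __name__ == "__main__":
    rng = random.Random(42)
    mu, log_var = [0.5, -1.0], [0.0, -2.0]  # pretend encoder outputs for one input
    print("sampled z:", reparameterize(mu, log_var, rng))
    print("KL term:", kl_to_standard_normal(mu, log_var))
```

The KL term pulls every encoded distribution toward the standard normal, which keeps the latent space smooth enough that sampling from it (the decoder step described above) produces plausible new data.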

Challenges: VAEs struggle to generate highly detailed data compared to GANs and transformers. Their outputs can sometimes lack sharpness or precision, especially in visual or complex industrial data.

Generative Adversarial Networks (GANs)

GANs are a type of generative AI framework where two networks, a generator and a discriminator, compete against each other to create highly realistic data. The generator produces synthetic data, while the discriminator evaluates whether the data is real or fake, forcing the generator to improve over time.

How They Work:

The generator learns to produce synthetic outputs (e.g., images, sensor data) from random noise.

The discriminator distinguishes between real data and the generator’s outputs.

The two networks are trained together in an adversarial process, where the generator’s goal is to fool the discriminator, and the discriminator’s goal is to identify fake data accurately.
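In the formulation introduced by Goodfellow et al. (2014), this adversarial process is a two-player minimax game over a single value function V. Here D(x) is the discriminator's estimated probability that x is real, G(z) is the generator's output for a random noise vector z, p_data is the distribution of real data, and p_z is the noise distribution:

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\!\left[\log\!\left(1 - D(G(z))\right)\right]
```

The discriminator is updated to increase V (scoring real samples near 1 and generated ones near 0), while the generator is updated to decrease it. In practice the generator is usually trained to maximize log D(G(z)) instead, since that gives it stronger gradients early in training, when the discriminator rejects its samples easily.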

Industrial Applications:

Synthetic Defect Creation for Quality Control: GANs generate images of defective products to train quality control systems on a broader range of scenarios, even those rarely seen in production.

3D Model Generation: GANs create realistic 3D designs of parts or tools, streamlining prototyping and simulation.

Energy Usage Optimization: GANs simulate energy consumption data under varying production scenarios, helping optimize energy-intensive processes.

Key Techniques:

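Wasserstein GANs (WGANs): Improve training stability by replacing the original loss with one based on the Wasserstein (earth mover's) distance, which yields smoother, more informative gradients.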

Conditional GANs (cGANs): Generate data conditioned on specific inputs, such as creating images of parts with specific dimensions.

CycleGANs: Translate data between two domains without needing paired training examples, such as turning hand-drawn designs into CAD-style renderings.

Challenges: GANs are notoriously difficult to train due to instability in the adversarial process. Problems like mode collapse (where the generator produces limited varieties of outputs) can occur. Additionally, training GANs often requires significant computational resources.

Transformer-Based Language Models

Transformer-based language models, such as GPT (Generative Pre-trained Transformer), are the foundation of modern natural language processing (NLP). They use an attention mechanism to process and generate text, capturing context well enough to produce fluent, human-like language.

How They Work:

Transformers use self-attention mechanisms to capture complex dependencies within textual data. These models are pre-trained on large text corpora, learning the patterns and structures that represent the grammar, syntax, and semantics of a language. Once trained, transformer-based models can be fine-tuned for various NLP tasks, including text generation, where they produce coherent, contextually relevant text from a given input or prompt. GPT models use a decoder-only architecture that generates text one token at a time, with each prediction conditioned on the tokens that came before it.

The self-attention mechanism enables the model to consider the importance of each word in relation to others, capturing context and meaning across entire sentences or documents.

Models are pre-trained on massive datasets and fine-tuned for specific tasks, making them highly versatile.
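The self-attention step can be sketched in plain Python as single-head scaled dot-product attention, the form used in the original Transformer architecture (Vaswani et al., 2017). The optional causal mask mirrors GPT-style decoders, where a token may only look at itself and earlier tokens. The 2-dimensional token vectors are made up, and reusing them directly as queries, keys, and values is a simplifying assumption: real models first multiply them by learned projection matrices and run many heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values, causal=False):
    """Single-head scaled dot-product attention over a sequence of token vectors.

    With causal=True, each position can attend only to itself and earlier
    positions, as in decoder-only (GPT-style) models.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, weights_per_token = [], []
    for i, q in enumerate(queries):
        # Relevance of every token j to token i; future tokens are masked out if causal.
        scores = [
            float("-inf") if causal and j > i else sum(a * b for a, b in zip(q, k)) / scale
            for j, k in enumerate(keys)
        ]
        weights = softmax(scores)
        weights_per_token.append(weights)
        # Each output is a weighted average of the value vectors.
        outputs.append([sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))])
    return outputs, weights_per_token


if __name__ == "__main__":
    # Made-up 2-dimensional embeddings for a 3-token input.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, weights = self_attention(tokens, tokens, tokens, causal=True)
    for row in weights:
        print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one token draws on every other token when building its context-aware representation.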

Industrial Applications:

Maintenance Log Analysis: Transformers analyze unstructured maintenance logs to identify recurring issues or predict future failures.

Operator Training: Generate training manuals or procedural documentation tailored to specific machines or operations in natural language.

Dynamic Workflow Automation: Create conversational AI systems that assist operators in real-time, suggesting machine settings or troubleshooting steps.

Manufacturing Chatbots: Provide support for factory floor personnel by answering questions about processes or troubleshooting based on equipment manuals.

Key Techniques:

Attention Mechanism: Determines the importance of each word in a sequence relative to others, ensuring coherent and context-aware text generation.

Positional Encoding: Adds sequence information to word embeddings, enabling the model to understand word order and context.

Transfer Learning: Pre-trained models like GPT are fine-tuned for specific industrial use cases, reducing training costs and time.
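One common form of positional encoding is the fixed sinusoidal scheme from the original Transformer paper, sketched below in plain Python; GPT-style models such as GPT-2 instead learn their position embeddings during training. The word embeddings in the usage example are made up.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal encoding: PE[pos][2i] = sin(pos / 10000^(2i/d)), PE[pos][2i+1] = cos(same angle)."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Even and odd dimensions share a frequency; lower dimensions oscillate faster.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add position information to a sequence of word embeddings (element-wise sum)."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]


if __name__ == "__main__":
    # The same made-up embedding repeated three times, as if one word appeared thrice.
    repeated_word = [[0.2, -0.1, 0.4, 0.0]] * 3
    for row in add_positions(repeated_word):
        print([round(v, 3) for v in row])
```

Without this step, identical words at different positions would look identical to the attention mechanism, because attention itself is indifferent to word order.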

Challenges: Transformer-based models require significant computational resources and large datasets for training. They also face challenges with interpretability, and their reliance on training data can lead to biases or inaccuracies if the data is incomplete or skewed.

AI is a vast and ever-evolving field, encompassing many other domains not mentioned here, such as robotics, evolutionary computing, knowledge representation, and multi-agent systems. These fields often overlap, blending methodologies and influencing each other to drive innovation. For example, advancements in natural language processing can enhance robotics, while techniques from reinforcement learning are applied in multi-agent systems. The boundaries between these fields are fluid, reflecting AI's dynamic nature as it adapts to new challenges and discoveries. This overview offers just a snapshot of AI at a moment in time, as the field continues to grow, redefine itself, and push the limits of what machines can achieve.

References:

Encyclopedia Britannica - B.J. Copeland - History of Artificial Intelligence, 2024: https://www.britannica.com/science/history-of-artificial-intelligence/Connectionism

Data Science Dojo - Zainab Arshad - Exploring the Applications of Neural Networks in 7 Different Industries, 2024: https://datasciencedojo.com/blog/applications-of-neural-networks/